Cazena is proud to publish this exclusive insight from Matt Aslett, 451 Research Vice President for Data, AI and Analytics.

Despite criticism, the data lake has emerged in the last decade as a solution to managing large volumes of data, and is relatively widely adopted. While simple on paper, the data lake is a lot more difficult to deliver in practice, with multiple moving parts that can fragment without significant manual intervention.

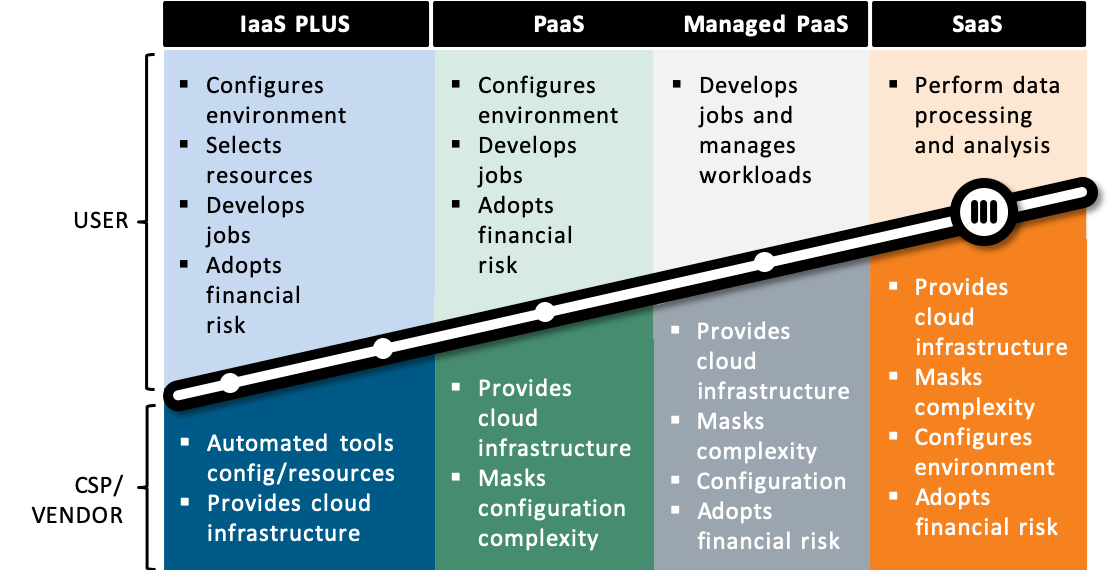

Data processing is shifting to the cloud, but IaaS and PaaS data lake environments still require a lot of manual intervention on the part of the user. True SaaS data lake environments mask the complexity, enabling users to concentrate on data processing and analysis rather than infrastructure management and software orchestration.

Why data lakes?

As companies of all shapes and sizes recognize the increasing importance of data and take steps to become more data-driven, one of the key challenges has been how best to rapidly convert large volumes of data into business insight.

It isn’t enough just to store more data from a greater variety of sources: not only enterprise applications but, increasingly, sensors and the Internet of Things, as well as AI and machine learning. What matters is how you add knowledge and insight to turn that data into wisdom and unlock its value.

In the last decade, the concept of the data lake emerged as a new approach to storing, processing and analyzing large volumes of data – particularly the combination of structured and unstructured raw data in its native format – that can be accessed by multiple users for multiple purposes.

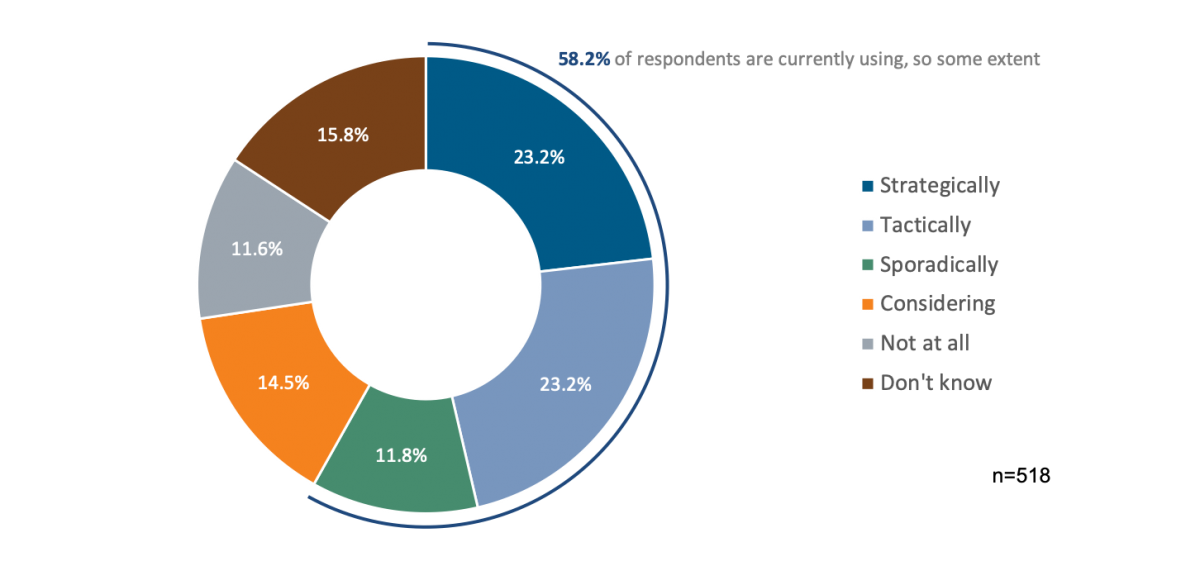

Data from 451 Research’s Voice of the Enterprise illustrates the growth in the adoption of data lake environments. More than 58% of respondents are using data lakes, to some extent, with a further 15% considering adoption.

451 Research: Adoption of a Data Lake Environment

Source: 451 Research

The term ‘data lake’ is credited to Pentaho’s founder, James Dixon, who first used it in a 2010 blog post in which he compared it to a large body of water that is fed from various source streams and that can be accessed by multiple users for multiple purposes. Dixon contrasted the data lake with a data mart, which could be considered the equivalent of bottled water: cleansed and packaged for easy consumption.

The idea was attractive but didn’t explicitly explain what a data lake was technically, or how to build one. Many early adopters set off ingesting data from multiple sources into Hadoop in the hope of proving value further down the line.

Without a clear idea of the requirements or use cases, however, instead of a data lake, several initial projects resulted in the creation of a data swamp – a single environment housing large volumes of raw data that couldn’t be easily accessed for any purpose, let alone multiple purposes.

A couple of years ago, 451 Research pointed out that the ‘data lake’ analogy failed to address how multiple users would access that data for multiple purposes. We offered an alternative analogy, the ‘data treatment plant’, arguing that industrial-scale processes were required to make data acceptable for its multiple desired end uses and for the multiple methods of accessing and processing it.

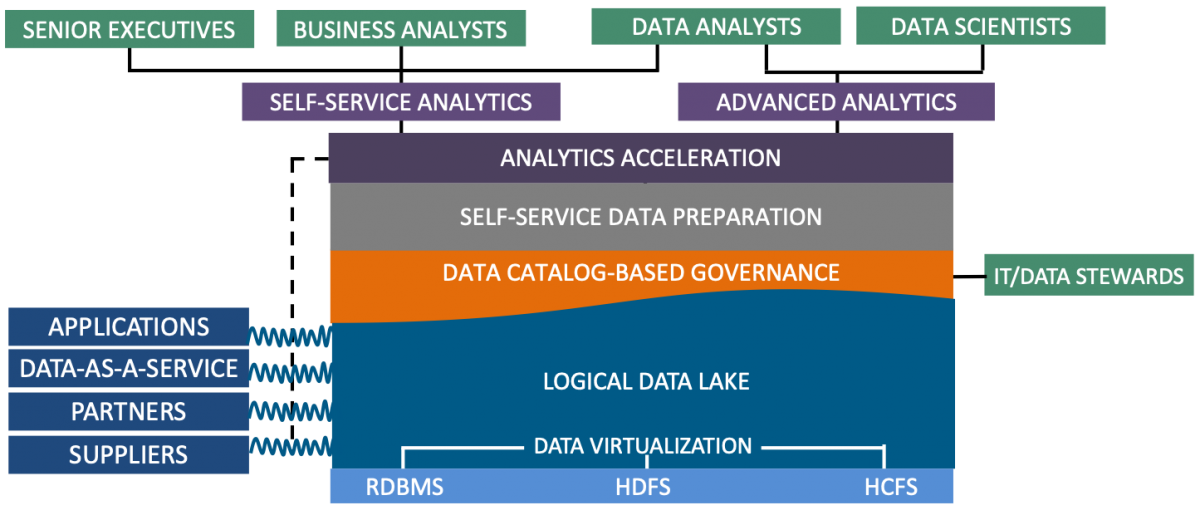

That wasn’t a battle we were ever likely to win, but while the term ‘data lake’ has taken off, greater emphasis is now being placed on those industrial-scale processes that are required to turn the data lake concept from theory into reality. These include data governance functionality, such as a data catalog that creates an inventory of what data is in the environment, and self-service data preparation functionality with which users can discover, integrate, cleanse and enrich data to make it suitable for analysis.
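To make the catalog idea concrete, here is a minimal sketch of an inventory of datasets that users can search by tag. It is an illustration only, not a description of any particular product: the class names, fields, dataset names and storage paths are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One dataset registered in the lake (all fields are illustrative)."""
    name: str
    location: str      # where the raw data lives, e.g. a storage path
    data_format: str   # e.g. "csv", "json", "parquet"
    owner: str
    tags: set[str] = field(default_factory=set)


class DataCatalog:
    """A toy in-memory inventory of what data is in the environment."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        # Registering a dataset is what keeps it discoverable later.
        self._entries[entry.name] = entry

    def find_by_tag(self, tag: str) -> list[str]:
        # Return the names of all datasets carrying the given tag, sorted.
        return sorted(n for n, e in self._entries.items() if tag in e.tags)


# Hypothetical datasets, used only to exercise the sketch.
catalog = DataCatalog()
catalog.register(CatalogEntry("sensor_readings", "lake/raw/sensors/", "json", "iot-team", {"iot", "raw"}))
catalog.register(CatalogEntry("orders", "lake/raw/orders/", "csv", "sales-ops", {"sales", "raw"}))

print(catalog.find_by_tag("raw"))
```

Even a structure this simple shows the difference between a lake and a swamp: data that is never registered cannot be found by anyone other than the person who loaded it.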

The definition of a data lake has expanded over time, beyond Hadoop, to address Hadoop-compatible cloud storage, and also potentially relational databases as part of what might be considered a logical data lake, as well as optional capabilities such as data virtualization, analytics acceleration and stream-based continuous data integration.

451 Research: The Components of a Functioning Data Lake

Source: 451 Research

This is all well and good in terms of a theoretical architecture, but it’s a lot more difficult to deliver in practice. In particular, such an architecture is very brittle and can fragment if not held together with a lot of duct tape and manual intervention.

One potential solution is the cloud, and we have seen an increasing shift toward data workloads being deployed into cloud environments, in part because they offer the potential to lower the configuration and management complexity.

It is important to note, however, that not all cloud-based data processing is created equal. There are significant differences – in terms of the reduction in complexity – between IaaS, PaaS and SaaS environments.

IaaS certainly reduces the requirement on the user to configure and manage the underlying infrastructure, but the user still bears the financial risk of selecting the most appropriate infrastructure resources, and the onus remains on the user to configure and orchestrate the software environment, as well as the data processing jobs.

PaaS reduces this configuration complexity, as well as the financial risk, but there is still a significant requirement on the user to assemble, configure and manage the relevant software services: many PaaS data lake offerings can best be thought of as ‘blueprints’ for assembling a data lake, rather than the finished article.

For enterprises without the in-house skills to manage and assemble a data lake themselves, SaaS provides a more appropriate option. The service provider takes on the responsibility (both technical and financial) for configuring, managing and orchestrating not just the underlying infrastructure, but also the software environment, enabling users to focus their attention on performing data processing and analytics: converting large volumes of data into business insight.
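The split of responsibilities described above can be summarized as a simple lookup table. The sketch below is a deliberately coarse reading of the three preceding paragraphs, not a formal 451 Research model: the task names and the three-way user/shared/provider labels are assumptions made for illustration, and "shared" stands in for cases where the text says a burden is reduced rather than removed.

```python
from enum import Enum


class Party(Enum):
    USER = "user"
    SHARED = "shared"      # burden reduced for the user, not removed
    PROVIDER = "provider"


# Illustrative mapping only; task names are hypothetical labels.
RESPONSIBILITIES = {
    "IaaS": {
        "infrastructure management": Party.PROVIDER,
        "infrastructure selection risk": Party.USER,
        "software configuration and orchestration": Party.USER,
        "data processing and analysis": Party.USER,
    },
    "PaaS": {
        "infrastructure management": Party.PROVIDER,
        "infrastructure selection risk": Party.SHARED,
        "software configuration and orchestration": Party.USER,
        "data processing and analysis": Party.USER,
    },
    "SaaS": {
        "infrastructure management": Party.PROVIDER,
        "infrastructure selection risk": Party.PROVIDER,
        "software configuration and orchestration": Party.PROVIDER,
        "data processing and analysis": Party.USER,
    },
}


def user_tasks(model: str) -> list[str]:
    """Return the tasks that remain wholly with the user for a given model."""
    return [task for task, party in RESPONSIBILITIES[model].items()
            if party is Party.USER]


for model in RESPONSIBILITIES:
    print(f"{model}: {', '.join(user_tasks(model))}")
```

Read this way, the list of user-owned tasks shrinks from three under IaaS to two under PaaS and to data processing and analysis alone under SaaS.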

451 Research: Cloud Data Processing Options

Source: 451 Research

Conclusion & Resources

There are multiple technology choices for assembling and running data lake environments. To learn more about navigating the options and recent advances in cloud technologies, explore the webinar and resources.