WEBINAR TRANSCRIPT

SaaS Data Lakes: Simplifying Cloud Data Lakes for Rapid Analytics

In this webinar, learn about recent advances in cloud data lakes that simplify and accelerate the enterprise journey. Explore the transcript below or watch the complete event here.

Presentations

- Data Lake Trends, Matt Aslett, 451 Research

- Introduction to Cazena’s SaaS Data Lake, Prat Moghe, Cazena

- SaaS Data Lake Case Study with CWT, Gordon Coale

- Data Lake Q&A with all panelists

Presentation Transcript: CWT’s SaaS Data Lake Case Study

Gordon Coale

Senior Architect

CWT (formerly Carlson Wagonlit Travel)

“Thank you very much, Prat. Really appreciate that introduction.

Just a little bit about me and CWT. We’re probably one of the biggest companies you’ve never heard of, unless you use one of our travel services. We are essentially a corporate travel agency. We currently service around a third of the FTSE 500 and a third of the S&P 500, so we’ve got quite a big footprint.

But the effort is to stay in the background and just get your employees from A to B. I love some of our statistics, and some of these are actually calculated using our Data Lake. Every day, we handle enough hotel bookings to fill all the hotel rooms in Paris every night. At any point in time, there are probably 100,000 people having their travel managed by us, so that’s people who are either on a flight or in a hotel room or taking part in another travel activity.

As corporates go, we’re a medium-sized corporate, about 18,000 employees. Lots of those are in our call centers, which means our tech teams have to be that much more skilled and quite focused on delivering the right business outcomes.

One of the reasons I joined CWT is that I’ve had some background both in banking and previously in the travel industry.

Prior to joining CWT, for example, I was working for a major investment bank running a petabyte scale, on-premises Data Lake. One of the reasons CWT hired me was to try and make sense of their data efforts in general, and their Data Lake in particular. This isn’t my first rodeo.

I guess the difference this time is I had a partner in Cazena that made some of this — a lot of it actually! — much, much easier.

So, why did CWT choose a SaaS Data Lake? One of the reasons we did (and it was prior to my time here) is:

We tried it twice before, and it stalled, effectively. We got stuck. We tried it once with an on-premises solution, essentially a small team-sized Data Lake hiding in one of our equipment cupboards. Some of the more digital-savvy teams, about three or four years ago now, also tried AWS EMR. The main reason they got stuck was lack of skills.

These were talented DevOps teams for the most part, but they were not big data experts. They certainly were not Data Lake experts. So, what they quickly found was they got bogged down in the management of the lake itself and the time to go over these brittle components that Matt talked about earlier.

They found actually managing the lake was a barrier to doing their day job. These were general developers and software product people. Add on to that as well, we had some really strong business use cases coming up for both data science and the Data Lake. All of a sudden, we went from having the first iterations of our lakes being useful tools in our kit bag, to really needing to be able to deploy production applications on our Data Lake.

We no longer had the time or luxury to learn the lake ourselves, to ramp up our skillsets, to hire all of those resources that Prat talked about that you need to run and build a Data Lake.

That’s a really overlooked part of this whole story, in my opinion. In my experience, the average hiring time is around three months, from initial sourcing of the employee with job adverts to actually onboarding them. That’s an automatically built-in time delay for anything you want to do in a material way to make your Data Lake work.

What we wanted to do was — we had some small sample datasets, and we didn’t have the lake to use them on. If we could concentrate our recruitment to focus on engineers and data scientists, then we could worry about the data itself and not about the infrastructure.

The other reason we chose partnering with Cazena is compliance. Now, whilst travel isn’t as heavily regulated as a bank, we still have regulators to answer to. We still have our customers to answer to, and we still have our internal CISO functions to answer to.

Bearing in mind we started this journey with Cazena around three years ago now, it was a very different compliance and security environment. We had to reassure our CISO teams, as a relative first mover in reasonably large-scale cloud infrastructure, that we had done the due diligence or were working with a partner that had done the due diligence for us. After a fairly diligent search, we came to the conclusion that working with Cazena was going to deliver that reassurance.

We are in a different place now, and some of you will possibly have less of that challenge.

There are a lot of organizations out there that do have a risk-averse environment. Partnering with someone like Cazena will help smooth that journey through the certifications they can show you and the mature conversations they can have with the CISO teams. It helps to smooth the worries out of the way.

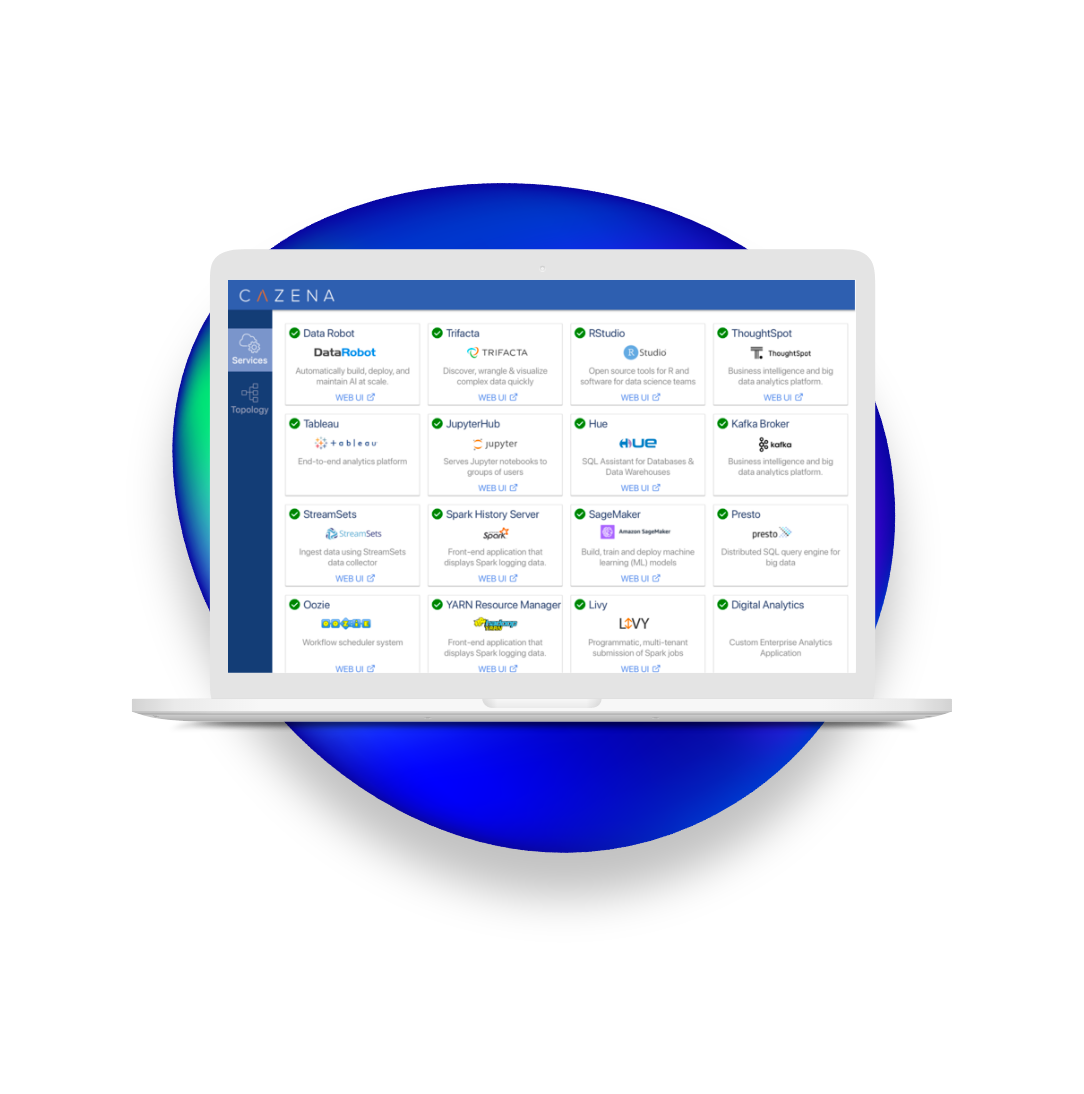

So, what did we achieve with Cazena? We’re fairly mature now, so most of this infrastructure you can see on this slide has been in place for at least 18 months, if not longer.

What we’ve been able to do with Cazena is massively extend the number of use cases we surface.

Before we deployed our Data Lake, we essentially had a kind of traditional enterprise data platform that you can see on the left-hand side there, with a stereotypical data quality gateway, a data warehouse, reporting and some ETL running it. All the kind of classic enterprise software and solutions you would get in this space.

As an architect, this might look quite complex or maybe overly-complex.

But the important thing is each one of these is addressing a dedicated business purpose, all coordinated, managed, protected, and effectively sanity-checked by the Cazena Data Lake service.

We’ve been able to deploy new ETL tools, new analytic engines, and essentially both a hardcore team of data scientists and an additional layer of more ad-hoc or business-oriented data scientists, all of whom come to the lake and use it day in, day out, either to do ad-hoc data science or to work on building production data science products.

Part of what you saw mentioned earlier is this concept of the AppCloud. We’ve worked through a number of different data science platforms with Cazena, finding the one that fit best for us. We’ve worked with Anaconda. We’re currently working with JupyterHub, all searching out what hits our sweet spot for data science productivity. We’ve even gone to the point where we’ve actually got a secondary data warehouse nestled up against our Data Lake for some very specific OLTP-type processes.

It’s been a heck of a journey. Probably this has taken 18 months, but the important thing about that is that was for deployment of applications that had immediate business benefit. The infrastructure deployment, which you can see on my next slide hopefully, took much less time. So, we started this journey roughly at the beginning of 2017.

We engaged Cazena, and we initially deployed Cazena very quickly, within a quarter, on Azure. The interesting story that we both love to tell on this part is that we were actually pretty much ready for Cazena to deploy on that first red dash you see there. The rest of it was contractual negotiations with the lawyers involved, and the to-ing and fro-ing of dotting the i’s and crossing the t’s. The actual infrastructure was sitting there waiting for us. Prat and the team just kept reminding us of that, so we would speed up the lawyer conversations, just so we could get productive!

We certainly met that four-week turnaround that Prat was talking about. We were able to have our first production batches running on the lake.

Effectively what we did — we cloned our enterprise data mart as the core of a more agile data platform on our Data Lake. That was done within that four-week window. We then went on a capability expansion program deploying RStudio, Anaconda, doubling the size of the cluster, which was absolutely seamless, putting this secondary database in, Greenplum, switching on functionality like Kudu.

And then, this is something that’s incredibly rare in my experience, we actually hopped cloud providers.

So we were on Azure. We chose Azure for what felt like good business reasons at the time. GDPR was coming up. We looked for a European instance. We didn’t have a preferred cloud vendor at that point, so we effectively felt that Azure was a safe choice. Roll the clock forwards a year, and the company had made a strategic cloud vendor decision. That was AWS and it was, “oh, lordy, we’ve chosen the wrong core platform. What do we do about this?”

Well, actually we talked to our partner at Cazena and they arranged a seamless migration between clouds for us. I cannot overstate the value that they were able to give to us in that migration. It was seamless. We lost no business continuity. We did it all over the space of a weekend.

We went from a lake running on Azure to a lake running on AWS with no hiccups through our business.

That was an amazing experience, and it was a real privilege to be part of such a professionally run operation. The great thing is it cost me barely anything; it didn’t even cost me a sleepless night or two! It was just so well-managed by our partners that all we had to do was get involved in the targeting operations of it, you know, how we move users, how we advise our users. The rest of it was done by our Cazena partners.

Where we’ve ended up in the space of 20 months is — not only did we deploy our first cloud Data Lake, we deployed our second cloud Data Lake on a completely different provider.

We’ve got lake batches running. We’ve got feeds coming in from our mobile apps, our data science studios — various different ones. We’ve also got an interesting new BI tool being fed completely from the lake. We’ve also got revenue-earning, go-to-market products deployed and consuming lake data.

We managed to dodge that data swamp that Matt talked about earlier because we were able to work with Cazena and use our Data Lake in a targeted way. I think that’s the key business benefit that we’ve had from Cazena. It’s flexibility, it’s lack of worry.

We don’t have any of those five roles Prat talked about in-house. They’re all with Cazena. We have a big data admin, who is part-time, and his only real worry is provisioning users. Because obviously, we have to do that internally. We have to make sure that a particular user has access to particular data and it’s done in an approved way, so that’s not something we could offload to Cazena. But all the rest of it is managed by Cazena.

It’s been slick, seamless, and a pleasure to be able to deliver all this business benefit, with so little worry about the infrastructure and all the surrounding services.”