WEBINAR TRANSCRIPT

SaaS Data Lakes: Simplifying Cloud Data Lakes for Rapid Analytics

Data lakes are critical for every organization, offering a complete environment for analytics and digital transformation. But data lakes are complex and challenging – especially for IT teams that lack Cloud, DevOps & SecOps skills. In this webinar, learn about recent advances in cloud data lakes that simplify and accelerate the enterprise journey. Explore the transcript below or watch the complete event here.

Presentations

- Data Lake Trends, Matt Aslett, 451 Research

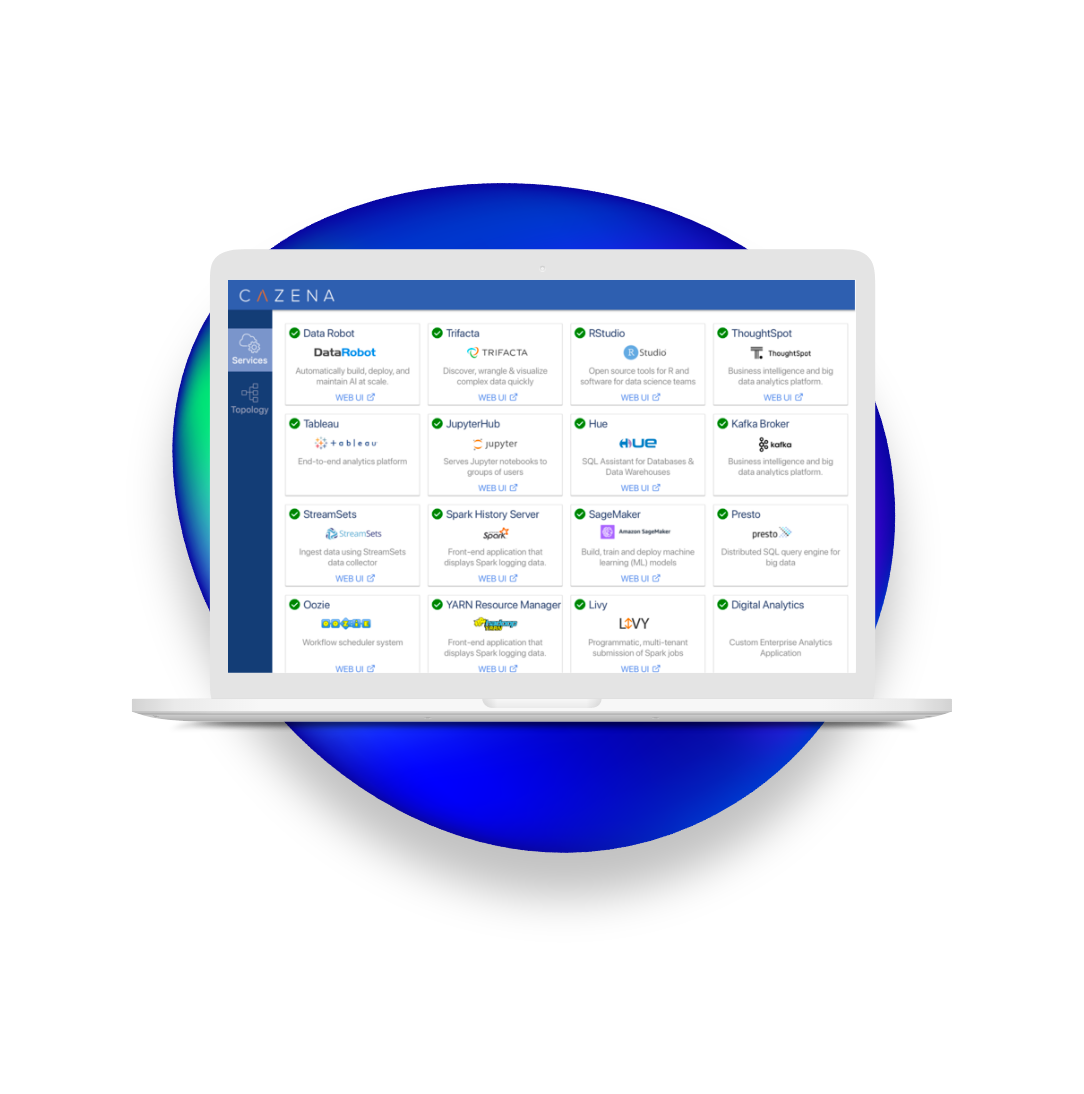

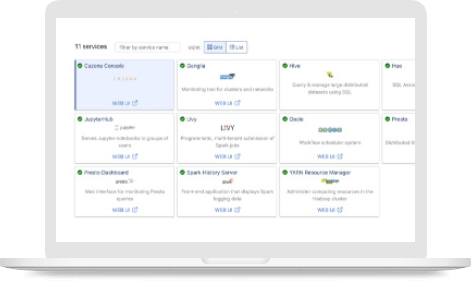

- Introduction to Cazena’s SaaS Data Lake, Prat Moghe, Cazena

- SaaS Data Lake Case Study with CWT, Gordon Coale

- Data Lake Q&A with all panelists

Presentation Transcript: Data Lake Trends

Matt Aslett

Research Vice President for Data, AI, and Analytics

451 Research

“Thank you for having us on the webinar and for everybody attending today. I’m going to kick things off by talking about the overall industry trends and the market evolution, as we see it in relation to Data Lakes, and in particular, the option of SaaS for Data Lakes.

Before I get into that, just a brief introduction to 451 Research for anybody who’s not come across the company before. We are an IT research and advisory company. We’re now in our 20th year. We’ve got a couple of hundred employees, more than half of whom are analysts, and we serve around 1000 clients across the industry: not only the vendor community, but also corporate advisory, finance, professional services, and of course IT decision-makers. We have around 2000 technology and service providers under coverage. We spread our coverage across the entire spectrum of the industry, from the data center right out to the edge.

The team that I run is the data, AI, and analytics practice. So, what we’ve seen in that practice, and beyond in the industry at large in the last few years, is an increasing focus from organizations around trying to be data-driven. We see that companies of all shapes and sizes recognize the increasing importance of data.

Of course, companies have been making decisions based on data forever, literally for centuries. But what we see evolving is companies getting that data-driven decision-making really into the heart of the business, so that it is not just something that’s driven by data analysts or data scientists, but actually the core of the business itself.

We see that shaping up. For example, from a 451 Survey we ran earlier this year, we see 80% of respondents saying that data is growing in importance for them. 37% of them are saying that it’s significantly more important to their organization. We also ask companies about the extent to which they’re making strategic decisions based on data, and almost all companies say, “Of course I’m doing that,” some more so than others. What we’ve tended to do in the last few years is really zero in on the 14% here, where nearly all strategic decisions are data-driven, and we call these the data drivers.

These are companies out there at the leading edge of data-driven decision-making, and it’s been quite interesting to look at some of the ways in which those kinds of companies are adopting technology, and also processes and different ways of organizing, much more rapidly than companies that are behind on that. We do think that has interesting ramifications.

Those companies that can adapt the quickest, to take advantage of the data they have, stand more chance of succeeding and surviving in the long-term.

Now, one of the other key trends we’ve seen over the last five to 10 plus years has been this abundance of data, data growing significantly.

We think about it as the transactional era, days gone by, obviously where the enterprise applications were the key source of data for any organization. We moved then into the interaction era. The volumes of data increased as companies started looking at e-commerce, the web server logs, mobile data, taking advantage of data that perhaps hadn’t been available to them before.

Also, what we saw was companies beginning to take advantage of data that was available to them, but for which they didn’t really have the platforms and the tools to store and process that data — or at least not to make it economically viable to do so.

What we’ve seen is that Hadoop and other data platforms have made it much more economically viable to start taking advantage of social media data, data from sensors, IoT, and AI. We’ve seen that volumes of data are continuing to grow.

Of course, though, it’s not just about how much data you have. An illustration from @gapingvoid [web comic]1 is significant.

It highlights that: It’s not just the volume of data you’ve got. It’s the way in which organizations are able to apply their knowledge, domain expertise, and insight to join the dots in that data — to turn it into business insight, to turn it into actual wisdom and unlock the value in that data. That, of course, is much easier said than done.

The way we’ve seen many organizations have been attempting to do that in the last few years is through this concept of the Data Lake.

It really emerged in the past five to 10 years as a new approach to storing, processing, and analyzing the large volumes of data, particularly that combination of structured and unstructured raw data in its native format, so that it can be accessed by multiple users for multiple purposes.

We see that the Data Lake environment has been reasonably widely adopted. This data is from the survey we ran earlier this year. As you can see here, getting towards 60% of respondents are currently using a Data Lake to some extent, some more strategically, some more tactically.

But despite fairly widespread adoption, what we’ve seen is that the Data Lake, as a concept, in some circles got itself a bit of a bad name. Not all projects and deployments have been successful.

One of the reasons we see for that is this. We use the three phases here from a South Park [television cartoon] episode many years ago with the “underpants gnomes.” [Original quote: “Phase 1: Collect underpants Phase 2: ?? Phase 3: Profit.”]2 This is highly facetious, obviously, but it’s quite important.

It tells us something about the way in which many organizations are going about their decision-making — not just companies, but also governments, as well. There is a grand idea and there’s a lot of excitement and investment, but without going through all phases of planning. Without understanding how something will be built and what the ultimate business goal of that investment will be.

The term Data Lake was first used by the founder of Pentaho, James Dixon, back in 2010. He came up with this idea of comparing data to this large body of water that’s fed by various streams, that can be accessed by multiple users for multiple purposes. You can contrast this with the traditional approach of a “data mart,” which can be considered the equivalent of bottled water, where it’s cleansed and prepackaged for a specific purpose.

What we saw is that this idea, this concept, was very attractive. But, look at this original blog post, and a lot of what was articulated and written at the time: There really wasn’t anything to explain what technically a Data Lake was, how you should go about building one, and how you should organize the data within it to get the outcomes that you were looking for.

What we saw is that many early adopters set off with this idea that, if you ingested all your data into a Hadoop environment, then you could figure it out as you went along and you’d prove the value somewhere down the line.

What many of those organizations ended up with, instead of a Data Lake, was a data swamp: a singular environment housing large volumes of raw data, so it ticks that box, but one that really failed in terms of the ability of the organization to easily access that data, really for any purpose, let alone for multiple purposes.

We argued a few years ago that rather than a “Data Lake,” an alternative concept that might be useful to think about would be a “data treatment plant” — where the really important thing, for users accessing that data for multiple purposes, is the industrial-scale processes that are required to make the data that’s in there acceptable for those multiple end users, for multiple purposes. Because, there are different ways of accessing and processing that data depending on the ultimate outcome.

If you compare the beautiful image of a Data Lake versus a data treatment plant, it wasn’t an argument we were going to win. But what we have seen is a much greater focus in recent years on those industrial-scale processes that are required to turn the Data Lake concept from theory into reality. There are really multiple pieces that go along with that.

In particular, we’ve seen a much greater focus on data governance functionality, particularly the data catalog, which enables organizations to create an inventory to understand what is in that Data Lake. What have they put in there, and what is the data quality? Obviously, data privacy is important, in terms of understanding the nature of that data. There are security ramifications. Then, the self-service data preparation and data access to enable users to discover, integrate and abridge that data to make it available for analysis, depending on the ultimate use case.

Now, all this is pretty good for creating a nice graphical image of an architecture.

Obviously the use of Data Lakes has evolved beyond just Hadoop to even include compatible cloud storage and also relational databases as well. All of that is part of what you might consider a logical Data Lake.

We’ve also seen analytics acceleration become part of the picture, depending on the nature of the data — and data streaming, as well as data virtualization.

You can build out the components of this logical Data Lake environment, as you try to make it more useful for a particular use case.

The challenge with that is that the more pieces there are, the more complex it potentially gets to orchestrate them all, particularly when you might be talking about multiple open-source projects and components.

What we see is that this architecture can be very brittle. It really has the potential to fragment if it’s not held together with a whole load of duct tape and manual intervention.

The Data Lake, though it appears to be a beautiful, serene environment, can actually become something that takes up a lot of time, is very complex, and doesn’t necessarily deliver the results that people are looking for.

The cloud is one potential solution to alleviate at least part of that challenge. We’ve all seen that cloud computing has become much more widely adopted, particularly for data processing services, in recent years. By masking the complexity of the underlying infrastructure, and taking the requirement for configuring and managing away from the end user, there’s a potential to alleviate some of the complexity and management concerns in those environments.

As you can see here, when we look at planned adoption of data platforms, whether it’s analytic database, operational database, big data platforms, NoSQL, distributed ledger, we see that for new deployments, the adoption is shifting towards the cloud as you would expect to see.

The important thing is, when we start to look through the lens of Infrastructure as a Service, Platform as a Service, and Software as a Service — rather than just “the cloud” per se — these environments are not created equal. There are significant differences in terms of the reduction of complexity between those different environments.

Let’s think about this for a moment. Infrastructure as a Service certainly reduces the requirement on the end-user to configure and manage that underlying infrastructure.

But, the user is still responsible for adopting the financial risk and selecting the most appropriate infrastructure resources for their own particular environment.

The onus still remains on the user to actually configure and orchestrate the software environment, as well as the data processing job — even if we see some of the Infrastructure as a Service providers have begun to introduce tools to help with the configuration and resources.

Platform as a Service reduces this configuration complexity even further and begins to mask some of the financial risk, but there’s still a significant requirement on the end user to actually assemble, configure, and manage the relevant software services.

What we see is that many Platform as a Service based Data Lake offerings can really be thought of as ‘blueprints’ for how you assemble a Data Lake, rather than the finished article.

Things get a little bit more polished with the emergence of managed Platform as a Service environments, but there is another step beyond that with the Software as a Service delivery of Data Lake environments.

We’ve been talking about this for a couple of years, in terms of the growth that we anticipate for these kinds of Software as a Service delivery models, particularly for large-scale data processing environments, because they have, in particular, masked and reduced that complexity.

For the companies that lack the in-house skills to manage and assemble a Data Lake themselves, or frankly, have better things for people to be doing than spending all their time managing and configuring the different components, SaaS provides a much more appropriate option.

With SaaS, we see that the service provider takes on the responsibility, both technical and financial, for configuring, managing and orchestrating, not just the underlying infrastructure but also the various software components and architecture, enabling that user to focus their attention on performing data processing and analytics. Essentially, enabling the user to focus on what it is they were trying to achieve in the first place, which is converting large volumes of data into business insight — not managing multiple components to enable them to do so.

That was a very rapid run-through of the trends in the industry environment as we see it. Just to wrap up with a couple of the key conclusions.

We’ve seen that the Data Lake has emerged as one of the solutions to managing large volumes of data, and it is relatively widely adopted. We think it’s only had really a 10-year lifespan. Short, when you compare it to some of the existing data platforms, which have been around for three or four times that number of years.

However, naturally, not all adoptions have been successful. In particular, one of the reasons is the lack of focus on those industrial-scale processes required to make the data acceptable for multiple desired end-users, and multiple methods of processing and accessing that data.

As such, the Data Lake makes for definitely a very good theoretical architectural slide, but it’s much more complex and difficult to deliver in practice. Not to say it’s impossible, but it is definitely complex. In particular, with those multiple components that have to be stitched together, it is very brittle. It does have the potential to fragment if not held together with manual intervention and the equivalent of a lot of duct tape.

As data processing shifts to the cloud, we see that particularly the SaaS environments make that kind of capability a lot more accessible for organizations without the in-house skills to manage and configure that themselves, or, frankly, with better things to do than to have their highly paid staff focus on just keeping things stitched together. True SaaS Data Lakes manage and master that complexity and enable you to concentrate on the data processing and analytics rather than underlying infrastructure and software management.

With that, I thank you very much for your time. We’ll be taking questions later, so I’m very happy to take any questions on what we’ve presented here or the wider market. For now, I’ll hand it over to Prat from Cazena to talk a little bit about how the company fits in the environment that we’ve just described.”

Sourcing / Footnotes

1: @gapingvoid, information vs knowledge, January 2014. Original source.

2: South Park, Season 2, Episode 17, “Gnomes.” Comedy Central. Original source.