Cloud data lakes are gaining in popularity as enterprises move to the cloud for analytics and AI/ML. A cloud data lake brings together several components in a unified analytical environment, including cloud object storage, multiple data processing engines (SQL, Spark, and others), and modern analytical tools (ML, data engineering, and BI). It allows a wide variety of business users to rapidly ingest data and run self-service analytics. While cloud data lakes can provide significant scaling, agility, and cost advantages compared to on-premises data lakes, they also introduce unique security challenges. Cloud data lake security is critical.

Data lake architecture by design combines a complex ecosystem of components involved in ingesting, storing, and analyzing data, each one a potential path by which data can be exploited. Moving this ecosystem to the cloud can feel overwhelming to those who are risk-averse, but cloud data lake security has evolved over the years to the point where a properly secured deployment can be safer than an on-premises data lake and offer significant advantages over it.

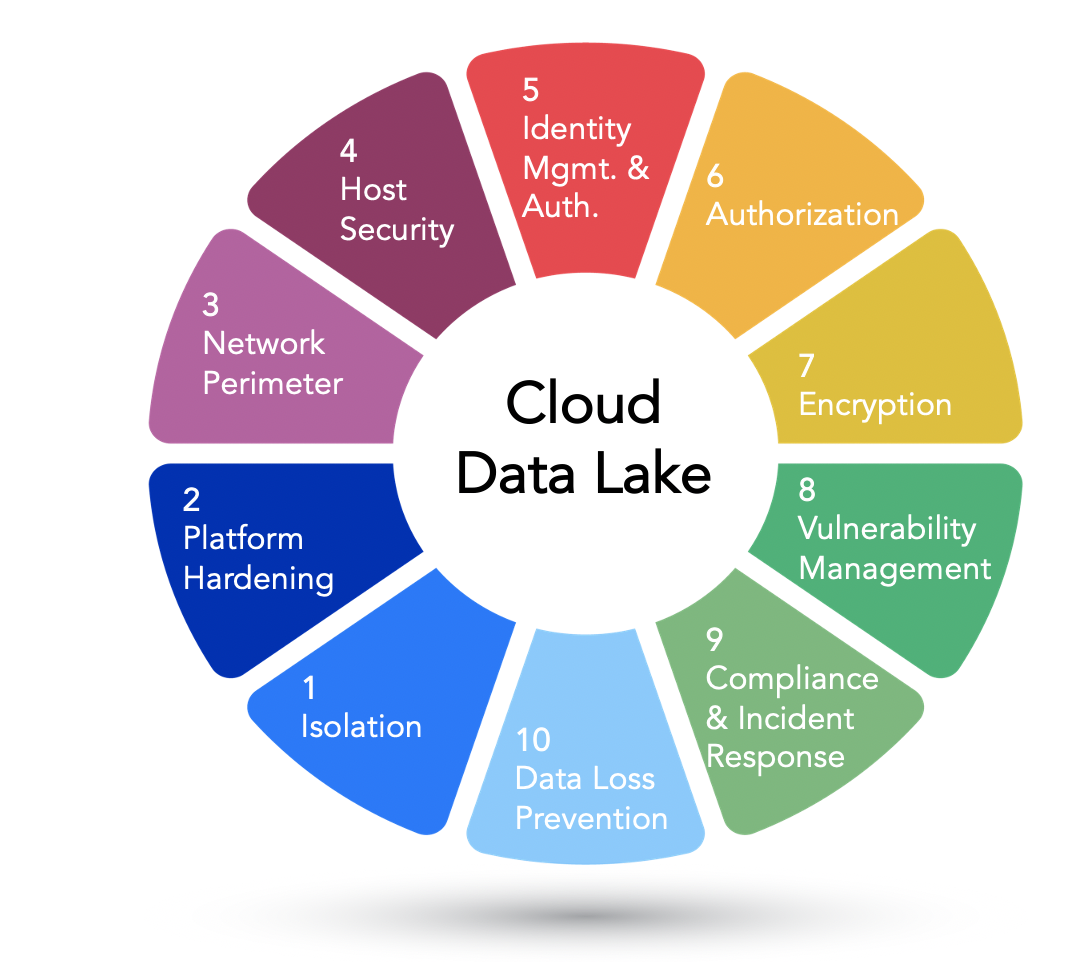

Here are 10 fundamental cloud data lake security practices that are critical to securing any deployment, reducing its risk, and providing continuous visibility. Where possible, we use AWS as a specific example of cloud infrastructure and the data lake stack, though these practices apply to other cloud providers and to any cloud data lake stack.

1. Security Function Isolation

Consider this practice the most important function and the foundation of your cloud security framework. The goal, described in a NIST Special Publication, is to separate security functions from non-security functions, and it can be implemented using least-privilege capabilities. When applying this concept to the cloud, your goal is to tightly restrict the cloud platform capabilities to their intended function. Data lake roles should be limited to managing and administering the data lake platform and nothing more. Cloud security functions should be assigned to experienced security administrators. Data lake users should have no ability to expose the environment to significant risk. A recent study by DivvyCloud found that one of the major causes of breaches in cloud deployments is simply misconfiguration and inexperienced users. By applying security function isolation and the principle of least privilege to your cloud security program, you can significantly reduce the risk of external exposure and data breaches.
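To make the least-privilege idea concrete, here is a minimal sketch of a check that flags IAM policy statements that go beyond a data lake role's intended function. The policy layout follows the standard IAM JSON policy format, but the bucket name, the list of "security" services, and the function names are assumptions made for illustration, not a recommended baseline.

```python
from typing import Any, Dict, List

# Hypothetical policy for a data lake administrator role. The actions and
# bucket name are illustrative assumptions only.
DATA_LAKE_ADMIN_POLICY: Dict[str, Any] = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-data-lake",
                "arn:aws:s3:::example-data-lake/*",
            ],
        }
    ],
}

# Assumption: services treated as security functions that data lake roles
# should not administer.
SECURITY_SERVICES = {"iam", "organizations"}


def _as_list(value: Any) -> List[str]:
    """IAM accepts either a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def find_overly_broad_statements(policy: Dict[str, Any]) -> List[str]:
    """Return findings for Allow statements that use wildcards or that
    grant actions in a security-administration service."""
    findings: List[str] = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement is also valid
        statements = [statements]
    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue
        for action in _as_list(statement.get("Action", [])):
            service = action.split(":", 1)[0]
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {index}: wildcard action {action!r}")
            elif service in SECURITY_SERVICES:
                findings.append(f"statement {index}: security action {action!r}")
        for resource in _as_list(statement.get("Resource", [])):
            if resource == "*":
                findings.append(f"statement {index}: wildcard resource")
    return findings
```

A check like this could run in a review pipeline before a role is deployed, so that a data lake role asking for `iam:CreateUser` or `Resource: "*"` is caught by a security administrator rather than discovered after a breach.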

2. Cloud Platform Hardening

Isolate and harden your cloud data lake platform, starting with a unique cloud account. Restrict the platform capabilities so that administrators can manage and administer the data lake platform and nothing more. The most effective model for logical data separation on cloud platforms is to use a unique account for your deployment. If you use AWS Organizations, you can easily add a new account to your organization. There is no added cost for creating new accounts; the only incremental cost you will incur is for the AWS network service you use to connect this environment to your enterprise.

Once you have a unique cloud account to run your data lake service, apply hardening techniques outlined by the Center for Internet Security. For example, CIS guidelines describe detailed configuration settings to secure your AWS account. Using the single account strategy and hardening techniques will ensure your Data Lake service functions are separate and secure from your other cloud services.

3. Network Perimeter

After hardening the cloud account, it is important to design the network path for the environment. It is a critical part of your security posture and your first line of defense. There are many ways to secure the network perimeter of your cloud deployment. Some will be driven by your bandwidth and/or compliance requirements, which may dictate using private connections or using cloud-supplied VPN (virtual private network) services and backhauling your traffic over a tunnel to your enterprise.

If you are planning to store any type of sensitive data in your cloud account and are not using a private link to the cloud, traffic control and visibility are critical. Use one of the many enterprise firewalls offered within the cloud platform marketplaces. They offer more advanced features that complement native cloud security tools and are reasonably priced. You can deploy a virtualized enterprise firewall in a hub-and-spoke design, using a single firewall or a highly available pair to secure all your cloud networks. Firewalls should be the only components in your cloud infrastructure with public IP addresses. Create explicit ingress and egress policies along with intrusion prevention profiles to limit the risk of unauthorized access and data exfiltration.
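The two rules in this section (only firewalls carry public IP addresses, and ingress is explicit rather than open to everyone) lend themselves to an automated audit. The sketch below runs that audit over a hand-written inventory; the `Resource` and `IngressRule` types, the role names, and the sample addresses are all hypothetical and stand in for whatever inventory export your platform provides.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class IngressRule:
    cidr: str  # source range allowed in, e.g. "198.51.100.0/24"
    port: int


@dataclass
class Resource:
    name: str
    role: str  # hypothetical labels such as "firewall", "compute", "database"
    public_ip: Optional[str] = None
    ingress: List[IngressRule] = field(default_factory=list)


def audit_perimeter(resources: List[Resource]) -> List[str]:
    """Flag public IPs on anything other than a firewall, and ingress rules
    whose source range covers every address (prefix length 0)."""
    findings: List[str] = []
    for resource in resources:
        if resource.public_ip is not None and resource.role != "firewall":
            findings.append(
                f"{resource.name}: public IP {resource.public_ip} on non-firewall resource"
            )
        for rule in resource.ingress:
            network = ipaddress.ip_network(rule.cidr, strict=False)
            if network.prefixlen == 0:
                findings.append(
                    f"{resource.name}: port {rule.port} open to any address ({rule.cidr})"
                )
    return findings
```

Checking the prefix length rather than comparing against the string `0.0.0.0/0` means the same rule also catches the IPv6 equivalent `::/0`.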

4. Host-based Security

Host-based security is another critical and often overlooked security layer in cloud deployments.

Much as firewalls do for network security, host-based security protects the host from attack and in most cases serves as the last line of defense. The scope of securing a host is quite vast and can vary depending on the service and function. A more comprehensive guideline can be found here.

- Host intrusion detection: This is an agent-based technology running on the host that uses various detection systems to find and alert on attacks and/or suspicious activity. There are two mainstream techniques used in the industry for intrusion detection. The most common is signature-based, which detects known threat signatures. The other is anomaly-based, which uses behavioral analysis to detect suspicious activity that would otherwise go unnoticed with signature-based techniques. A few services offer both, in addition to machine learning capabilities. Either technique will give you visibility into host activity and the ability to detect and respond to potential threats and attacks.

- File integrity monitoring (FIM): This is the capability to monitor and track file changes within your environments, and it is a critical requirement in many regulatory compliance frameworks. These services can be very useful in detecting and tracking cyberattacks. Since most exploits need to run their process with some form of elevated rights, they need to exploit a service or file that already has these rights. An example would be a flaw in a service that allows incorrect parameters to overwrite system files and insert harmful code. An FIM would be able to pinpoint these file changes, or even file additions, and alert you with details of the changes that occurred. Some FIMs provide advanced features such as the ability to restore files to a known good state or to identify malicious files by analyzing the file pattern.

- Log management: Analyzing events in the cloud data lake is key to identifying security incidents and is the cornerstone of regulatory compliance control. Logging must be done in a way that prevents the alteration or deletion of events by fraudulent activity. Log storage, retention, and destruction policies are required in many cases to comply with federal legislation and other compliance regulations.

The most common method to enforce log management policies is to copy logs in real time to a centralized storage repository where they can be accessed for further analysis. There is a wide variety of commercial and open-source log management tools, and most of them integrate seamlessly with cloud-native offerings like Amazon CloudWatch. CloudWatch is a service that functions as a log collector and includes capabilities to visualize your data in dashboards. You can also create metrics to fire alerts when system resources meet specified thresholds.
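The file integrity monitoring item above comes down to recording a known-good baseline of file hashes and comparing later snapshots against it. The following is a minimal sketch of that technique using only the Python standard library; the function names are illustrative, and a production FIM agent would add scheduling, tamper-resistant baseline storage, and alert delivery.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def hash_file(path: Path) -> str:
    """Return the SHA-256 digest of a file, read in chunks to bound memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root: Path) -> Dict[str, str]:
    """Map each file under root (by relative path) to its current digest."""
    return {
        str(path.relative_to(root)): hash_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files added, removed, or modified since the baseline."""
    shared = baseline.keys() & current.keys()
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(name for name in shared if baseline[name] != current[name]),
    }
```

In use, you would call `snapshot()` once on a trusted system, store the result somewhere the host cannot modify, and then run `compare()` against fresh snapshots on a schedule, alerting on any non-empty category.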

5. Identity Management & Authentication

Identity is an important foundation for auditing and for strong access control in cloud data lakes. When using cloud services, the first step is to integrate your identity provider (for example, Active Directory) with the cloud provider. For example, AWS provides clear instructions on how to do this using SAML 2.0. For certain infrastructure services, this may be enough for identity. If you do venture into managing your own third-party applications or deploying data lakes with multiple services, you may need to integrate a patchwork of authentication services, such as SAML clients and providers like Auth0, OpenLDAP, and possibly Kerberos and Apache Knox. For example, AWS provides help with SSO integrations for federated EMR Notebook access. If you want to expand to services like Hue, Presto, or Jupyter, you can refer to third-party documentation on Knox and Auth0 integration.

6. Authorization

Authorization provides data and resource access controls, as well as column-level filtering to secure sensitive data. Cloud providers incorporate strong access controls into their PaaS solutions via resource-based IAM policies and RBAC, which can be configured to limit access according to the principle of least privilege. Ultimately, the goal is to centrally define row- and column-level access controls. Cloud providers like AWS have begun extending IAM and now provide data and workload engine access controls such as Lake Formation, as well as increasing capabilities to share data between services and accounts. Depending on the number of services running in the cloud data lake, you may need to extend this approach with other open-source or third-party projects, such as Apache Ranger, to ensure fine-grained authorization across all services.
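As an illustration of what centrally defined row- and column-level controls mean in practice, here is a small sketch that applies a per-role policy to a result set before returning it. It is a toy model, not how Lake Formation or Apache Ranger are implemented; the role names, column names, and the `AccessPolicy` type are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class AccessPolicy:
    allowed_columns: FrozenSet[str]    # column-level control
    row_filter: Callable[[Row], bool]  # row-level control


# Hypothetical central policy table: one entry per role.
POLICIES: Dict[str, AccessPolicy] = {
    "analyst_emea": AccessPolicy(
        allowed_columns=frozenset({"order_id", "region", "amount"}),
        row_filter=lambda row: row["region"] == "EMEA",
    ),
    "auditor": AccessPolicy(
        allowed_columns=frozenset({"order_id", "region", "amount", "customer_email"}),
        row_filter=lambda row: True,
    ),
}


def authorized_view(role: str, rows: List[Row]) -> List[Row]:
    """Return only the rows and columns the role may see.
    Roles without a policy get nothing (default deny)."""
    policy = POLICIES.get(role)
    if policy is None:
        return []
    return [
        {column: value for column, value in row.items() if column in policy.allowed_columns}
        for row in rows
        if policy.row_filter(row)
    ]
```

Keeping the policy table in one place, rather than scattering filters through each query engine, is the point of the "centrally define" goal: every service consults the same definitions, and an unknown role is denied by default.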

7. Encryption

Encryption is fundamental to cluster and data security. Best practices for implementing encryption can generally be found in guides provided by cloud providers. It is critical to get these details correct, and doing so requires a strong understanding of IAM, key rotation policies, and specific application configurations. For buckets, logs, secrets, volumes, and all other data storage on AWS, you will want to familiarize yourself with KMS CMK best practices. Make sure you have encryption for data in motion as well as at rest. If you are integrating with services not provided by the cloud provider, you may have to provide your own certificates. In either case, you will also need to develop methods for certificate rotation, likely every 90 days.
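Certificate rotation is easy to state and easy to forget, so it helps to track it in code. The sketch below classifies certificates against the 90-day rotation period mentioned above; the 14-day warning window, the status labels, and the certificate names used in the example are assumptions for illustration.

```python
from datetime import date, timedelta
from typing import Dict

ROTATION_PERIOD = timedelta(days=90)  # rotation interval suggested in the text
WARNING_WINDOW = timedelta(days=14)   # assumption: how early to start warning


def rotation_status(issued_on: date, today: date) -> str:
    """Classify a certificate as 'ok', 'due_soon', or 'overdue'."""
    due = issued_on + ROTATION_PERIOD
    if today >= due:
        return "overdue"
    if today >= due - WARNING_WINDOW:
        return "due_soon"
    return "ok"


def certificates_needing_rotation(issued: Dict[str, date], today: date) -> Dict[str, str]:
    """Return only the certificates that need attention, with their status."""
    statuses = {name: rotation_status(issued_on, today) for name, issued_on in issued.items()}
    return {name: status for name, status in statuses.items() if status != "ok"}
```

For example, with `today = date(2024, 6, 1)`, a certificate issued on 2024-01-01 is reported as `overdue`, one issued on 2024-03-15 as `due_soon`, and one issued on 2024-05-01 is omitted because it is still `ok`.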

8. Vulnerability Management

Regardless of your analytic stack and cloud provider, you will want to make sure all the instances in your data lake infrastructure have the latest security patches. Implement a regular patching strategy for the OS and packages, including periodic security scans of every piece of your infrastructure. You can also follow security bulletin updates from your cloud provider (for example, the Amazon Linux Security Center) and apply patches based on your organization’s security patch management schedule. If your organization already has a vulnerability management solution, you should be able to use it to scan your data lake environment.

9. Compliance Monitoring and Incident Response

Compliance monitoring and incident response are the cornerstone of any security framework, enabling early detection, investigation, and response. If you have an existing on-premises security information and event management (SIEM) infrastructure in place, consider using it for cloud monitoring. Every market-leading SIEM system can ingest and analyze all the major cloud platform events.

Event monitoring systems can help you support compliance of your cloud infrastructure by triggering alerts on threats or breaches in control. They also are used to identify Indicators of Compromise (IOC).
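To show the kind of rule an event monitoring system evaluates, here is a minimal sketch that scans a list of platform events and raises alerts for control changes and repeated failed logins. The event dictionary layout, the threshold, and the choice of sensitive actions are assumptions for illustration; a real SIEM would ingest provider events in their native schema and apply far richer correlation.

```python
from collections import Counter
from typing import Dict, List

# Assumption: actions treated as changes to security controls.
SENSITIVE_ACTIONS = {"StopLogging", "DeleteTrail", "PutBucketAcl"}
# Assumption: number of failed logins per actor that triggers an alert.
FAILED_LOGIN_THRESHOLD = 3


def evaluate_events(events: List[Dict[str, str]]) -> List[str]:
    """Return alert messages for sensitive actions and repeated login failures."""
    alerts: List[str] = []
    failed_logins: Counter = Counter()
    for event in events:
        if event["action"] in SENSITIVE_ACTIONS:
            alerts.append(f"control change: {event['actor']} called {event['action']}")
        if event["action"] == "ConsoleLogin" and event.get("outcome") == "Failure":
            failed_logins[event["actor"]] += 1
    # Report brute-force candidates after all events have been counted.
    for actor, count in sorted(failed_logins.items()):
        if count >= FAILED_LOGIN_THRESHOLD:
            alerts.append(f"possible brute force: {count} failed logins for {actor}")
    return alerts
```

The first rule is a compliance check (someone touched a logging or access control), while the second is a simple indicator of compromise; both feed the same alert stream for the incident response team.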

10. Data Loss Prevention

To ensure integrity and availability of data, cloud data lakes should persist data on cloud object storage (for example, Amazon S3), which offers secure, cost-effective, redundant storage with sustained throughput and high availability. Additional capabilities include object versioning with retention lifecycles, which can enable remediation of accidental deletion or object replacement. Each service that manages or stores data should be evaluated for and protected against data loss. Strong authorization practices that limit delete and update access are also critical to minimizing data loss threats from end users. In summary, to reduce the risk of data loss, create backup and retention plans that fit your budget, audit, and architectural needs, strive to put data in highly available and redundant stores, and limit the opportunity for user error.
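The value of object versioning is easiest to see in a toy model. The sketch below is an in-memory stand-in for a versioned object store, written only to illustrate how delete markers and version history allow recovery from accidental deletion or replacement. It is not the Amazon S3 API; the class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Version:
    data: Optional[bytes]  # None represents a delete marker


class VersionedStore:
    """Toy versioned object store: every write or delete appends a version."""

    def __init__(self) -> None:
        self._history: Dict[str, List[Version]] = {}

    def put(self, key: str, data: bytes) -> None:
        self._history.setdefault(key, []).append(Version(data))

    def delete(self, key: str) -> None:
        # A delete hides the object but keeps earlier versions recoverable.
        self._history.setdefault(key, []).append(Version(None))

    def get(self, key: str) -> Optional[bytes]:
        history = self._history.get(key, [])
        return history[-1].data if history else None

    def roll_back(self, key: str) -> None:
        """Discard the newest version or delete marker so that the previous
        version becomes current again."""
        history = self._history.get(key, [])
        if len(history) < 2:
            raise KeyError(f"no earlier version of {key!r} to restore")
        history.pop()

    def trim(self, key: str, keep: int) -> None:
        """Retention lifecycle: keep only the newest `keep` versions."""
        if keep < 1:
            raise ValueError("keep must be at least 1")
        history = self._history.get(key, [])
        self._history[key] = history[-keep:]
```

The `trim` method shows the trade-off a retention lifecycle makes: fewer retained versions lower storage cost, but they also shorten how far back an accidental change can be undone.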

Conclusion: Comprehensive Data Lake Security is Critical.

The cloud data lake is a complex analytical environment that goes beyond storage and requires expertise, planning, and discipline to be effectively secured. Ultimately enterprises own the liability and responsibility of their data and should think of how to convert cloud data lakes into their “private data lakes” running on the public cloud. The guidelines provided here aim to extend the security envelope from the cloud provider’s infrastructure to include enterprise data.

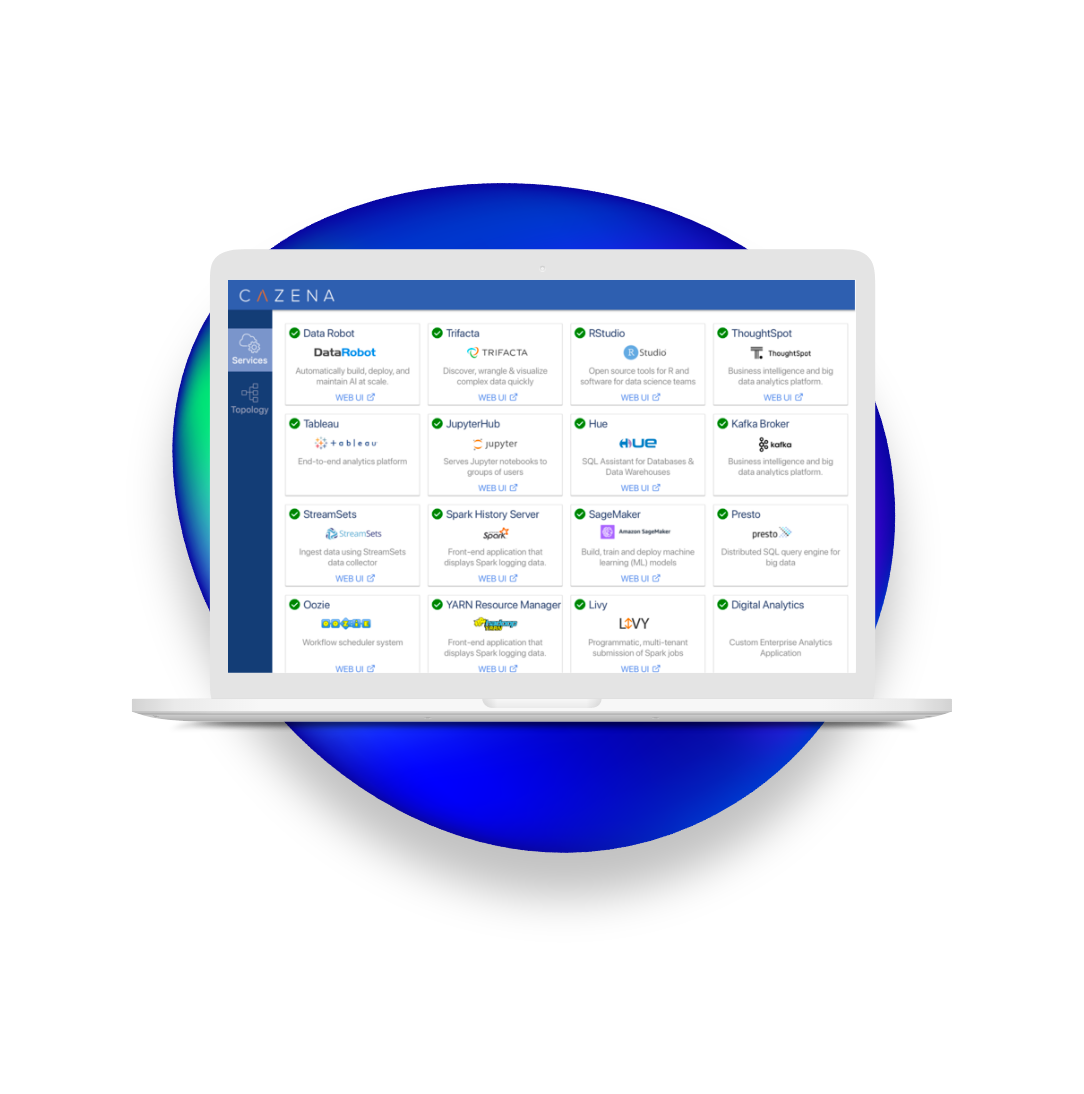

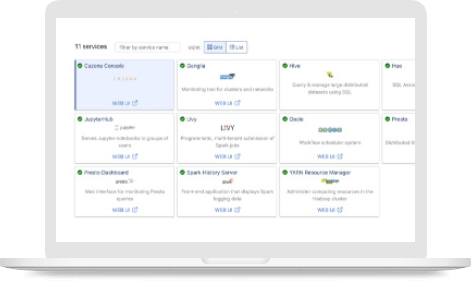

To learn more about an automated approach to securing cloud data lakes, refer to the whitepaper on Cazena’s Instant Data Lake. To see a demo of security controls and architecture for a secure, production-ready cloud data lake, request hands-on access to Try Cazena.