Series: The Hidden Challenges of Putting Hadoop and Spark in Production

A recent Gartner survey estimates that only 14% of Hadoop deployments are in production. We’re not surprised. We’ve had many conversations with companies that have been piloting Hadoop to extend their analytic capabilities beyond relational databases. These companies want to use new distributed processing frameworks like Apache Spark, which let them process data more efficiently, especially data that is inconsistently or variably structured.

The pilots typically involve finding a few existing servers in the datacenter and repurposing them to run Hadoop. There is often minimal infrastructure investment in trying out these new technologies, and sometimes it’s not even an official project.

But the story usually continues the same way: the pilot shows promising results, and a series of meetings produces a set of potential use cases. A project is established, the clock starts ticking, and the realization sets in:

Building a production-grade configuration for Hadoop is a non-trivial exercise. Whether on-premises in a local data center, or in the public cloud, putting Hadoop in production requires expertise and experience to get right.

Common challenges fall into a few important categories, which we’ll explore in this blog series:

- Infrastructure: Choosing and configuring servers for Hadoop

- Performance Optimization: Scaling and tuning Hadoop for price-performance

- To Cloud or Not: Selecting and configuring cloud infrastructure, and the new challenges it brings

- Security: What to consider

Choosing the Right Infrastructure for Hadoop

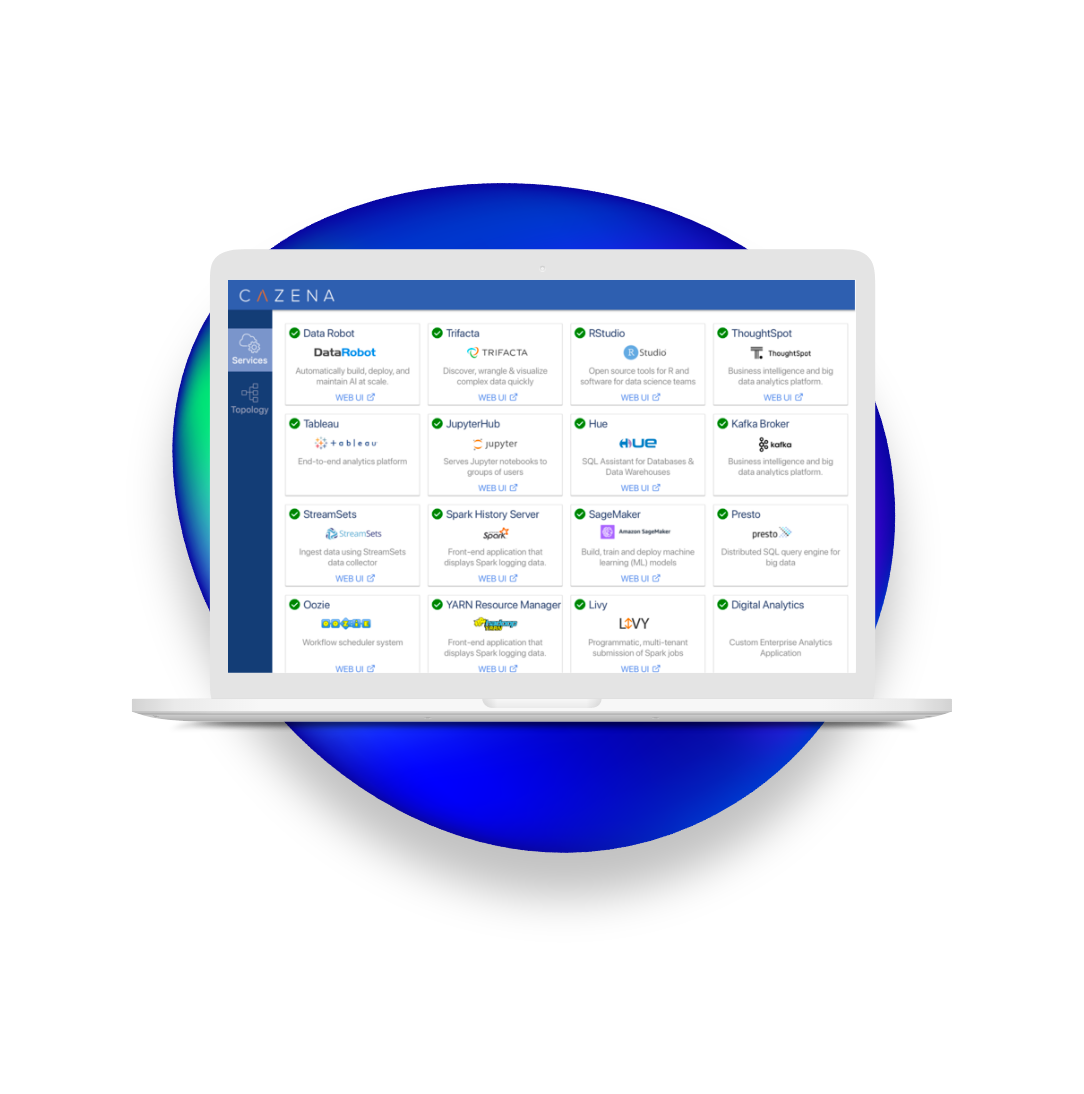

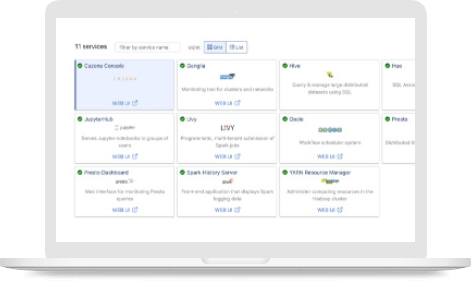

First, you need to decide what type of machines will support the Hadoop cluster. In the single-engine database world (Oracle, Greenplum (GPDB), Vertica, etc.), this is a constrained problem. In the Hadoop world, it is not as simple, because the Hadoop ecosystem combines multiple processing engines and components from multiple vendors and open source projects. The list is long: distributions from Apache, Hortonworks and Cloudera; engines such as Spark, Impala and MapReduce; and more. The different engines are optimized for different types of workloads.

One company we spoke with started their pilot using MapReduce (M/R) as the primary engine. However, they decided that Spark’s in-memory capabilities made it the faster, more appropriate processing engine for the problem they needed to solve. That’s when they learned the hard way that the optimal infrastructure for an M/R-dominant Cloudera Hadoop configuration differs from that for a Spark-dominant one, because Spark needs much more memory. They had to upgrade the existing hardware with new memory modules, which complicated and slowed down the project.

Keeping Up with a (Very) Rapidly Evolving Ecosystem

The underlying technologies in the Hadoop ecosystem are also evolving rapidly and forking off as new projects start and vendors add their own components. This makes them even harder to manage and plan for. Consider that as recently as 2015, Impala (Cloudera’s SQL engine for Hadoop) did not exploit multiple cores. That’s a big deal when you’re trying to optimize performance. Building the best price-performance configuration for that Impala release meant lots of machines with very few cores. Today, however, Impala does exploit multiple cores, so the configuration with maximum performance at the lowest cost would be fewer machines with more cores. Totally different. That’s just one example of how rapidly the technology is evolving.

If you’re trying to keep up with that in your datacenter, you’re constantly optimizing. It becomes incredibly difficult to choose the right type of server for on-premises deployments and manage capacity planning. In enterprise environments, servers are typically expected to last a minimum of 3 years, with the norm being closer to 5 years. Without a crystal ball, many companies overbuy hardware. They get really powerful machines “just to be safe,” even though that power and capacity might sit idle for years.
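To make the overbuying trade-off concrete, here is a minimal Python sketch. It assumes, purely hypothetically, that demand grows at a constant compound rate (40% a year here) and that the cluster is bought once, up front, sized for the projected demand in the final year of a 5-year server life. The function name `utilization_by_year` and all numbers are illustrative, not measurements from any real deployment.

```python
from typing import List


def utilization_by_year(initial_demand: float, annual_growth: float, years: int) -> List[float]:
    """Fraction of a fixed, up-front purchase that is actually used each year.

    Hypothetical model: capacity is sized once for the final year's projected
    demand (the "just to be safe" purchase), and demand grows at a constant
    compound rate. Units of demand are arbitrary (e.g. TB or cores).
    """
    # Demand in year y (1-indexed) under constant compound growth.
    demand = [initial_demand * (1 + annual_growth) ** (y - 1) for y in range(1, years + 1)]
    # Buy for the peak expected at the end of the servers' life.
    capacity = demand[-1]
    return [d / capacity for d in demand]


# Hypothetical inputs: 100 units of demand today, 40% annual growth, 5-year life.
for year, u in enumerate(utilization_by_year(100.0, 0.40, 5), start=1):
    print(f"year {year}: {u:.0%} of purchased capacity in use")
```

Under these assumptions, less than a third of the purchased capacity is in use during the first year, and the machines only reach full use in their final year. If actual growth falls short of the forecast, utilization is lower still, since the capacity is already paid for.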

In our next blog, we’ll talk more about a closely related subject: Hadoop performance, sizing and scaling.

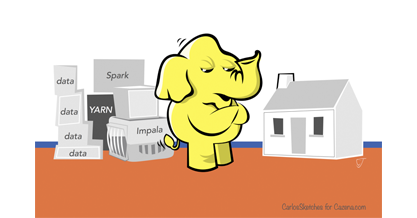

Original artwork by Carlos Joaquin in collaboration with Cazena.

Apache®, Apache Hadoop, Hadoop®, and the yellow elephant logo are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.